Scraping Xiaohongshu Detail Pages with Python selenium via Chrome's Debugger Mode

- wang

- 2024-03-01

- Python Notes


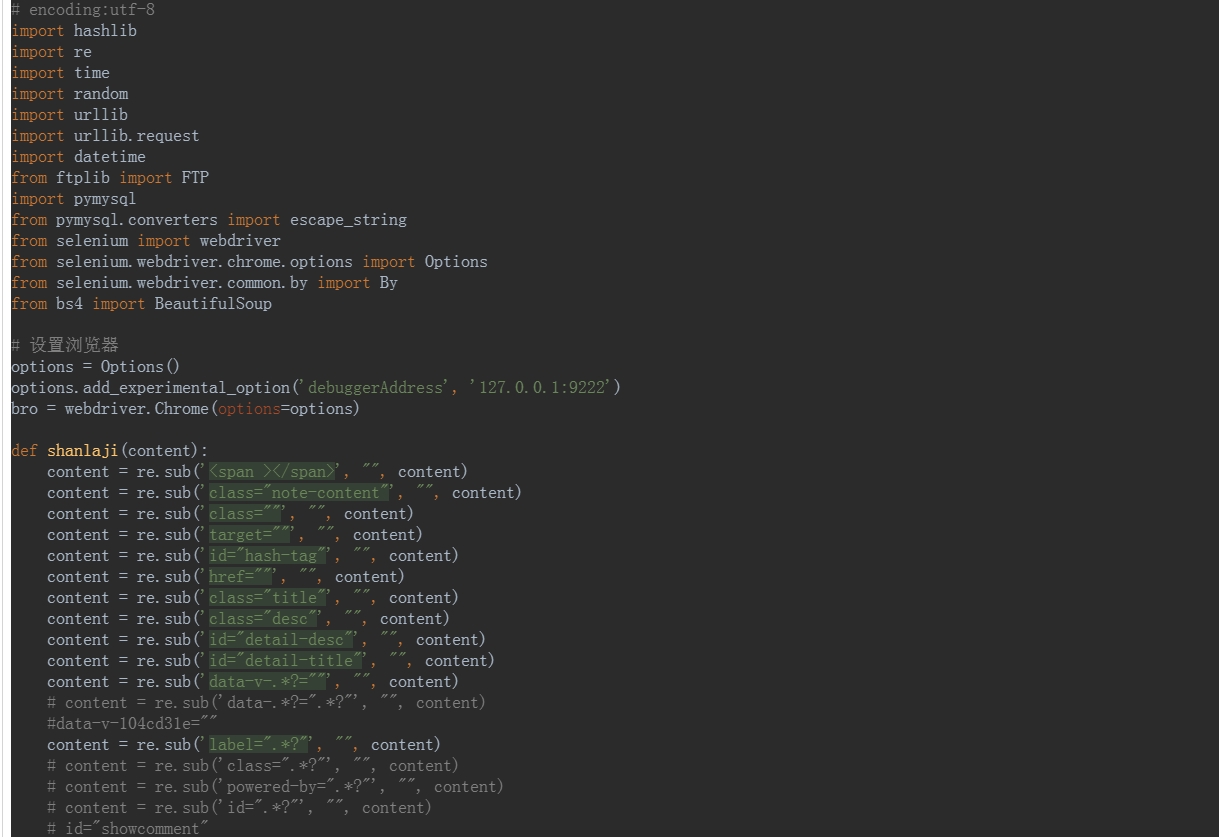
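The script attaches to a Chrome instance that is already running, through the `debuggerAddress` option set to `127.0.0.1:9222`, so Chrome has to be started with remote debugging enabled beforehand. Below is a minimal sketch of that step. The helper names `build_chrome_command` and `launch_chrome`, the Chrome path, and the profile directory name are assumptions made for illustration and are not part of the original script.

```python
import subprocess


def build_chrome_command(chrome_path, port=9222, user_data_dir="chrome-debug-profile"):
    """Build the command line that starts Chrome with remote debugging enabled."""
    return [
        chrome_path,
        f"--remote-debugging-port={port}",   # must match the port in debuggerAddress
        f"--user-data-dir={user_data_dir}",  # separate profile directory (assumed name)
    ]


def launch_chrome(chrome_path, port=9222, user_data_dir="chrome-debug-profile"):
    """Start Chrome in the background and return the process handle."""
    return subprocess.Popen(build_chrome_command(chrome_path, port, user_data_dir))


# Example (the path is an assumption; adjust it for your system):
# launch_chrome(r"C:\Program Files\Google\Chrome\Application\chrome.exe")
```

One likely reason to attach to an existing browser this way is that a session opened and logged in by hand can be reused by the script.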

# encoding:utf-8
import hashlib
import re
import time
import random
import urllib.request
import datetime
from ftplib import FTP
import pymysql
from pymysql.converters import escape_string
from selenium import webdriver
from selenium.webdriver.chrome.options import Options
from selenium.webdriver.common.by import By
from bs4 import BeautifulSoup

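# Set up the browser: attach to a Chrome instance that is already running
# with remote debugging enabled on port 9222
options = Options()
options.add_experimental_option('debuggerAddress', '127.0.0.1:9222')
bro = webdriver.Chrome(options=options)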

def shanlaji(content):
    """Strip leftover attributes and empty tags from the scraped HTML."""
    content = re.sub('<span ></span>', "", content)
    content = re.sub('class="note-content"', "", content)
    content = re.sub('class=""', "", content)
    content = re.sub('target=""', "", content)
    content = re.sub('id="hash-tag"', "", content)
    content = re.sub('href=""', "", content)
    content = re.sub('class="title"', "", content)
    content = re.sub('class="desc"', "", content)
    content = re.sub('id="detail-desc"', "", content)
    content = re.sub('id="detail-title"', "", content)
    # Vue scoped-style attributes, e.g. data-v-104cd31e=""
    content = re.sub('data-v-.*?=""', "", content)
    # e.g. label="Powered by 135editor.com"
    content = re.sub('label=".*?"', "", content)
    # Broader patterns, currently disabled:
    # content = re.sub('data-.*?=".*?"', "", content)  # e.g. data-miniprogram-appid="wx1cf959f941c33f64"
    # content = re.sub('class=".*?"', "", content)  # e.g. class="weapp_text_link wx_tap_link js_wx_tap_highlight"
    # content = re.sub('powered-by=".*?"', "", content)
    # content = re.sub('id=".*?"', "", content)  # e.g. id="showcomment"
    return content

# Upload an image to the Zitian server via FTP
def ftp_img(wangzhan, bendi_file, md5_name):
    today = str(datetime.date.today())
    f_img = FTP('111111', timeout=1100)
    f_img.login('ssd4', 'rAPDC6WzDf38YCjP')
    # Create the <site>/<date> directories if needed, then enter them
    for folder in (str(wangzhan), today):
        try:
            f_img.mkd(folder)
        except Exception:
            pass  # most likely the directory already exists
        f_img.cwd(folder)  # upload path
    try:
        with open(bendi_file, 'rb') as file:
            f_img.storbinary('STOR %s' % md5_name, file)
    except Exception:
        # Retry once after a short pause
        time.sleep(5)
        with open(bendi_file, 'rb') as file:
            f_img.storbinary('STOR %s' % md5_name, file)
    f_img.quit()
    print('Image uploaded successfully')

def page():
    content = ''
    # Connect to the database
    conn_weixin = pymysql.connect(
        host='42.333333333',
        user='2323322323',
        password='sWEms5TKewFtnfiC',
        database='232323',
        charset='utf8mb4',
    )
    cursor_weixin = conn_weixin.cursor(cursor=pymysql.cursors.DictCursor)
    # Fetch the newest URL that has not been processed yet
    sql = 'select * from xiaohongshu_url where status=0 order by id desc limit 1'
    cursor_weixin.execute(sql)
    res = cursor_weixin.fetchone()
    while not res:
        print('No data left; retrying in 5 seconds')
        time.sleep(5)
        conn_weixin.commit()  # end the current transaction so the next read can see new rows
        cursor_weixin.execute(sql)
        res = cursor_weixin.fetchone()
    url = 'https://www.xiaohongshu.com' + res['url'].replace('search_result', 'explore')
    bro.get(url)

    # Process the images: each slide carries its picture as a CSS background-image
    imgs = bro.find_elements(By.CLASS_NAME, 'swiper-slide')
    img_urls = []
    for img in imgs:
        style = img.get_attribute('style') or ''
        for i in style.split(';'):
            if 'background-image' in i:
                img_url = i.split('"')[-2]
                img_urls.append(img_url)
    img_urls = list(dict.fromkeys(img_urls))  # remove duplicates, keep page order

    # Install the User-Agent header once for all downloads
    opener = urllib.request.build_opener()
    opener.addheaders = [('User-Agent', 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/36.0.1941.0 Safari/537.36')]
    urllib.request.install_opener(opener)
    today = str(datetime.date.today())
    for img_url in img_urls:
        new_img_name = str(res['wangzhan_id']) + "_" + str(time.time() + random.randint(1, 100)).replace('.', '') + '.webp'
        urllib.request.urlretrieve(img_url, new_img_name)
        with open(new_img_name, 'rb') as f:
            md5_name = str(res['wangzhan_id']) + "_" + hashlib.md5(f.read()).hexdigest() + '.webp'
        # Upload to the data center
        ftp_img(res['wangzhan_id'], new_img_name, md5_name)
        content = content + f'''<p style="text-align: center;" ><img src='https://img.taotu.cn/ssd/ssd4/{res['wangzhan_id']}/{today}/{md5_name}' ></p> '''
        print('Image URL replaced successfully')

    div1 = bro.find_element(By.CLASS_NAME, 'note-content')
    div1_bs = BeautifulSoup(div1.get_attribute('outerHTML'), "html.parser")
    # Blank out the attributes of hashtag links so shanlaji() can strip them
    for i in div1_bs.find_all(name='a', id='hash-tag'):
        i['href'] = ''
        i['class'] = ''
        i['target'] = ''
    # Drop the bottom container and any inline images
    bottom = div1_bs.find(name='div', class_='bottom-container')
    if bottom is not None:
        bottom.extract()
    for i in div1_bs.find_all(name='img'):
        i.extract()
    content = shanlaji(content + str(div1_bs))

    # rcgz
    if int(res['wangzhan_id']) == 58:
        conn_wangzhan = pymysql.connect(
            # host='192.168.102.1',
            host='32222222',
            user='232332',
            password='3223',
            database='232332',
            charset='utf8mb4')
        cursor_wangzhan = conn_wangzhan.cursor(cursor=pymysql.cursors.DictCursor)
        # Parameterized query: the driver escapes the values
        sql = 'insert into article (title,content,time,fid,zuozhe) values (%s,%s,%s,%s,%s)'
        cursor_wangzhan.execute(sql, (res['title'], content, int(time.time()), res['fid'], '小红书_' + res['gzh']))
        conn_wangzhan.commit()
        print('■■■■■■■■■■■■■■■■■■■■■■■■■■ rcgz: inserted into the database')
        sql = 'update xiaohongshu_url set status=1 where id=%s'
        cursor_weixin.execute(sql, (res['id'],))
        conn_weixin.commit()
        print('Status updated successfully')
        cursor_wangzhan.close()
        conn_wangzhan.close()
    cursor_weixin.close()
    conn_weixin.close()

# Process one URL every 8 seconds, indefinitely
while True:
    page()
    time.sleep(8)
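The image step in `page()` reads each slide's `style` attribute, splits it on `;`, and takes the text between the last pair of double quotes. A slightly more defensive variant is sketched below using a regular expression. The helper name `extract_background_urls` and the sample URLs are hypothetical, and the pattern assumes the style value has the usual `background-image: url("...")` shape.

```python
import re

# Matches background-image: url("...") with double, single, or no quotes.
_BG_URL = re.compile(
    r'background-image\s*:\s*url\(\s*["\']?(.*?)["\']?\s*\)',
    re.IGNORECASE,
)


def extract_background_urls(styles):
    """Return the unique background-image URLs, in first-seen order."""
    seen = {}
    for style in styles:
        if not style:  # get_attribute('style') can return None or ''
            continue
        for match in _BG_URL.finditer(style):
            url = match.group(1)
            if url:
                seen.setdefault(url, None)
    return list(seen)


if __name__ == "__main__":
    sample = [
        'width: 100px; background-image: url("https://example.com/a.webp");',
        None,
        "background-image:url(https://example.com/b.webp)",
    ]
    print(extract_background_urls(sample))
```

In the script this could be fed with `[img.get_attribute('style') for img in imgs]`. Unlike the split-based approach, it does not raise an error when a style value contains no quotes.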
